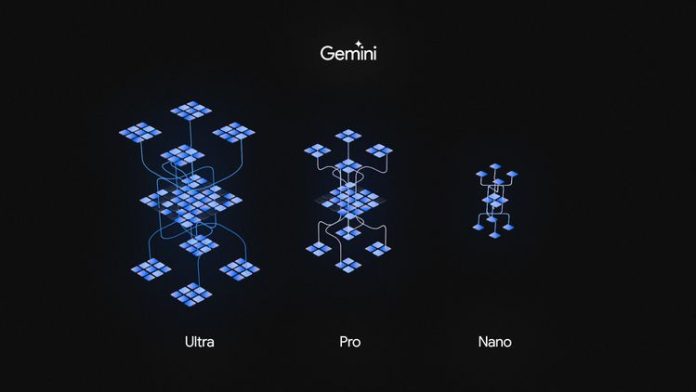

Google has suddenly unveiled another AI model called Gemini, which looks like a sweet but unnecessary renaming of its existing model. The launch appears to be multifaceted, featuring three distinct versions: Gemini Ultra, Gemini Pro, and Gemini Nano. However, a closer examination of Gemini Pro, the initial offering, raises questions about whether Google's bold promises align with the delivered capabilities.

Gemini Pro, touted as a lightweight version, is set to power Bard, Google’s alternative to ChatGPT. While Sissie Hsiao, GM of Google Assistant and Bard, claims improved reasoning and understanding capabilities, the lack of independent verification and live demos during the virtual press briefing leaves room for skepticism.

Gemini Ultra, the flagship model designed for complex multimodal tasks, demonstrates prowess in understanding nuanced information across text, images, audio, and code. Google asserts superiority over OpenAI’s GPT-4, particularly in handling video and audio interactions. However, the absence of transparency regarding training datasets raises concerns about ethical considerations and potential biases within Gemini Ultra.

The rush to launch Gemini raises eyebrows, especially considering Google’s past struggles in the generative AI arena. Reports suggest challenges in handling non-English queries and a lack of understanding of Gemini Ultra’s novel capabilities, indicating potential development hurdles. The decision to withhold information about training data collection further adds to the model’s opacity.

Gemini’s benchmark comparisons with GPT-4 showcase its strengths, notably in multimodal tasks. Yet, questions about hallucination, bias, and toxicity persist, mirroring issues prevalent in other generative AI models. Google’s silence on localization efforts for Global South countries also highlights potential biases in Gemini’s language capabilities.

Gemini’s natively multimodal approach distinguishes it from GPT-4, allowing it to transcribe speech, answer questions about audio and videos, and comprehend art and photos. However, limitations in image generation, delayed availability, and uncertainties about a monetization strategy for Gemini leave room for improvement.

The Gemini launch marks Google’s attempt to reclaim ground lost to OpenAI’s ChatGPT. While progress is evident in Bard’s enhancements since its criticized launch, Gemini’s troubled development, potential reliance on third-party contractors, and unclear timelines for image generation raise concerns.

CEO Sundar Pichai describes Gemini as the beginning of a new AI era at Google. Available in several versions, including Gemini Nano, a variant that runs natively and offline on Android devices, the model is positioned as a transformative force across Google's products.

In the face-off between OpenAI's GPT-4 and Google's Gemini, Google claims Gemini comes out ahead on 30 of 32 benchmarks. Notably, Gemini's strengths lie in comprehending video and audio, in line with its multimodal focus. The real test, however, is everyday use, where Google hopes Gemini will outshine GPT-4 in tasks like brainstorming, information lookup, and coding.

Gemini was trained on Google's Tensor Processing Units (TPUs), which Google says makes it faster and more cost-effective to run, giving it a competitive edge. Google's new TPU v5p, designed for training and running large-scale models, is meant to extend that efficiency advantage.

While Gemini is poised to become an integral part of Google’s ecosystem, its cautious release strategy, especially for Gemini Ultra, reflects the company’s commitment to safety and responsibility. Google acknowledges the risks associated with state-of-the-art AI systems and emphasizes a controlled beta release to identify potential issues before widespread deployment.