Google’s new video search feature represents a leap forward in how users interact with search engines by combining video analysis with natural language understanding. Here is a closer look at the technology behind it and its implications:

1. Combining Visual and Contextual Information

The key innovation here is Google’s ability to process a moving scene (video) in real time. Google Search has traditionally relied on text input and, more recently, image input (via Google Lens). Video adds an extra layer of complexity: it carries much richer data than a single image because it captures a sequence of events, movements, and multiple objects at once. By combining this visual data with the user’s question, Google can return results that are highly contextual and specific to the situation being captured.

For example:

- A user might record a video of a bird flying and ask, “What kind of bird is this?” The system will analyze the bird’s movement, physical features, and other elements in the video to deliver an answer.

- If someone is capturing a street scene and asks, “What restaurant is nearby that serves vegan food?”, Google can take into account the visual surroundings and location to provide a contextual answer based on real-time input.

2. Advanced Machine Learning and AI Integration

Behind this functionality are Google’s machine learning models, which enable real-time video processing and natural language comprehension. These include:

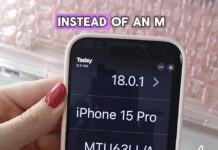

- Computer Vision: Google’s AI can identify objects, animals, landmarks, plants, and other visual elements in a video. It is trained on vast datasets to recognize patterns, shapes, and characteristics to make these identifications.

- Natural Language Processing (NLP): When users ask a question, Google’s NLP capabilities interpret the intent behind the question and relate it to the video content. This allows the system to link the visual and verbal inputs seamlessly.

- Multimodal Search Capabilities: Combining different types of input (visual, textual, and auditory) allows Google to offer more sophisticated results that are specific to the context the user is experiencing. Multimodal search capabilities bridge the gap between traditional keyword searches and real-world experiences.
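Google has not published how it fuses visual and textual signals, so the following is only a toy illustration of one common multimodal technique, late fusion of embeddings: the video and the question are each turned into a vector, the two vectors are averaged, and candidate answers are ranked by cosine similarity to the result. The three-dimensional vectors (axes roughly "bird-ness", "red-ness", "blue-ness"), the candidate labels, and the 50/50 weighting are all hypothetical; real systems use learned encoders that produce much larger vectors.

```python
import math

Vector = list[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def fuse(video_vec: Vector, text_vec: Vector, video_weight: float = 0.5) -> Vector:
    """Late fusion: weighted average of the normalized video and text embeddings."""
    v, t = normalize(video_vec), normalize(text_vec)
    return normalize([video_weight * x + (1 - video_weight) * y for x, y in zip(v, t)])


def rank(candidates: dict[str, Vector], video_vec: Vector, text_vec: Vector,
         video_weight: float = 0.5) -> list[str]:
    """Order candidate answers by similarity to the fused query, best first."""
    query = fuse(video_vec, text_vec, video_weight)
    return sorted(candidates, key=lambda name: cosine(query, candidates[name]), reverse=True)


# Hypothetical toy embeddings with axes (bird-ness, red-ness, blue-ness).
CANDIDATES = {
    "blue jay": [1.0, 0.0, 1.0],
    "cardinal": [1.0, 1.0, 0.0],
    "blue car": [0.0, 0.0, 1.0],
}
video = [1.0, 0.1, 0.9]     # clip of a bluish bird in flight
question = [1.0, 0.0, 0.0]  # "What kind of bird is this?"

print(rank(CANDIDATES, video, question, video_weight=1.0))  # video only
# → ['blue jay', 'blue car', 'cardinal']
print(rank(CANDIDATES, video, question, video_weight=0.5))  # video + question
# → ['blue jay', 'cardinal', 'blue car']
```

With the video alone, the blue car outranks the cardinal because the clip is mostly blue; adding the question's "bird" signal pushes the non-bird candidate to the bottom. That shift is the kind of context a multimodal query is meant to capture.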

3. Integration with Google Ecosystem

This feature will likely be deeply integrated into Google’s ecosystem of services and devices, including:

- Google Lens: This technology builds on Google Lens, which already lets users search with images. Video search extends those capabilities from still images to moving scenes.

- Google Assistant: Video search could be combined with Google Assistant, making it easier for users to interact with the search feature by simply asking questions out loud while recording video.

- Google Maps: Video search could be especially useful when integrated with location-based services. Users could capture video of their surroundings and receive local search results, recommendations, or navigation tips tailored to what’s happening in real time.
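For location-based questions such as the vegan-restaurant example in section 1, what the user asked for has to be combined with where the device is. A minimal sketch, assuming the device coordinates and a tagged list of places are already available: filter places by tag, compute great-circle distance with the haversine formula, and keep those within a radius, nearest first. Every place name, coordinate, and the 500 m radius are made up for illustration; a production map service would presumably weigh many more signals than distance.

```python
import math
from dataclasses import dataclass, field

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


@dataclass
class Place:
    name: str
    lat: float
    lon: float
    tags: set[str] = field(default_factory=set)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby(places: list[Place], lat: float, lon: float, tag: str,
           radius_m: float = 500) -> list[tuple[str, int]]:
    """Names and rounded distances of places tagged `tag` within radius_m, nearest first."""
    hits = []
    for place in places:
        if tag in place.tags:
            dist = haversine_m(lat, lon, place.lat, place.lon)
            if dist <= radius_m:
                hits.append((dist, place.name))
    return [(name, round(dist)) for dist, name in sorted(hits)]


# Hypothetical places around a user standing at (48.8566, 2.3522).
PLACES = [
    Place("Green Bowl", 48.8596, 2.3522, {"vegan", "cafe"}),
    Place("Plant Kitchen", 48.8576, 2.3522, {"vegan"}),
    Place("Steak House", 48.8570, 2.3522, {"steak"}),
    Place("Far Vegan Deli", 48.8666, 2.3522, {"vegan"}),
]

print(nearby(PLACES, 48.8566, 2.3522, "vegan"))
# → [('Plant Kitchen', 111), ('Green Bowl', 334)]
```

The steakhouse is dropped by the tag filter and the deli, about 1.1 km away, by the radius.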

4. Practical Applications and Use Cases

The video search feature opens up a wide range of possibilities across different sectors and everyday scenarios:

- Retail and Shopping: Imagine walking into a store, pointing your camera at a product, and asking, “Is there a cheaper version online?” or “Where can I find this item in stock?”

- Travel and Exploration: Tourists could use the feature to capture video footage of landmarks, historical sites, or local attractions, asking questions about their significance, best times to visit, or even the history behind them.

- Education: In a classroom or at home, students could record videos of a science experiment, a natural phenomenon, or a historical artifact, asking questions about it and receiving explanations or related educational resources in real time.

- Home Repair and DIY Projects: Users could point their camera at a broken appliance or a piece of furniture and ask for how-to videos, part replacement guides, or step-by-step instructions for repairs.
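The questions in these scenarios ask for different kinds of help: identifying an object, comparing prices, checking stock, finding something nearby, or getting repair instructions. Before retrieving results, a system has to work out which one the user means, the intent interpretation described under Natural Language Processing above. The following is a deliberately simple rule-based stand-in for that step; the intent names and regular expressions are hypothetical, and a production system would more likely rely on a trained language model than on keyword rules.

```python
import re

# Hypothetical intents and hand-written patterns, checked in order; the first match wins.
INTENT_PATTERNS = [
    ("identify", re.compile(r"\bwhat (kind|type) of\b", re.IGNORECASE)),
    ("price_compare", re.compile(r"\b(cheaper|price)\b", re.IGNORECASE)),
    ("find_in_stock", re.compile(r"\bin stock\b|\bwhere can i (find|buy)\b", re.IGNORECASE)),
    ("nearby", re.compile(r"\bnearby\b|\bnear me\b", re.IGNORECASE)),
    ("how_to", re.compile(r"\bhow (do|can|to)\b|\b(fix|repair)\b", re.IGNORECASE)),
]


def classify_intent(question: str) -> str:
    """Return the first matching intent name, or 'general' if no pattern matches."""
    for intent, pattern in INTENT_PATTERNS:
        if pattern.search(question):
            return intent
    return "general"


print(classify_intent("What kind of bird is this?"))            # → identify
print(classify_intent("Is there a cheaper version online?"))    # → price_compare
print(classify_intent("Where can I find this item in stock?"))  # → find_in_stock
print(classify_intent("How do I fix this dishwasher?"))         # → how_to
```

Keyword rules break down quickly on paraphrases ("Could I get this for less somewhere?"), which is why learned language models are the usual choice for this step.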

How it works:

- Point the Camera: Users record a video of a scene or object.

- Ask a Question: They can ask a contextual question, such as “What kind of plant is this?” or “What kind of car is this?”

- Search Results: Google processes the video together with the question and returns relevant information, much as its image search does for photos.
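The three steps can be sketched as a small pipeline. This is a minimal sketch under stated assumptions, not Google's implementation: it assumes some image classifier has already produced one label per video frame, keeps every fifth frame to bound the work, takes a majority vote so that a few blurry or occluded frames do not decide the answer, and attaches the winning label to the user's question. The sampling rate, the 50% voting threshold, the query format, and the frame labels are all hypothetical.

```python
from collections import Counter
from typing import Optional


def sample_frames(frame_labels: list[str], every_n: int = 5) -> list[str]:
    """Keep every n-th frame to bound processing cost (a hypothetical policy)."""
    return frame_labels[::every_n]


def aggregate_labels(frame_labels: list[str], min_share: float = 0.5) -> Optional[str]:
    """Majority vote across frames; None if no label reaches min_share of the votes."""
    if not frame_labels:
        return None
    label, count = Counter(frame_labels).most_common(1)[0]
    return label if count / len(frame_labels) >= min_share else None


def build_query(question: str, label: Optional[str]) -> str:
    """Attach the recognized subject to the user's question (hypothetical format)."""
    if label is None:
        return question  # nothing reliable recognized; fall back to the question alone
    return f"{question} (detected subject: {label})"


# Hypothetical per-frame output of an image classifier over a 30-frame clip.
labels = ["monstera deliciosa"] * 20 + ["philodendron"] * 6 + ["blurry"] * 4

subject = aggregate_labels(sample_frames(labels))
print(build_query("What kind of plant is this?", subject))
# → What kind of plant is this? (detected subject: monstera deliciosa)
```

A single snapshot taken at frame 20 would have been labeled "philodendron"; voting across sampled frames lets the clear views outweigh a few misleading ones, which is one way a video can be more informative than a still image.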

5. Privacy and Ethical Considerations

As with any new technology, there are concerns related to privacy and data collection. Google’s video search feature will likely raise questions about how user data is handled, especially given that video recordings could capture sensitive information or private environments. Google will need to ensure that its privacy policies are robust, providing users with control over how their video data is used and stored.

6. The Future of Search

Google’s vision with this feature aligns with its broader goal of making search more conversational, intuitive, and multimodal. As more advanced artificial intelligence is integrated into search engines, we will likely see:

- More Sophisticated AR and VR Integration: Future iterations of video search may include augmented reality (AR) overlays, providing instant information as users move their cameras through a scene.

- Expanded Multilingual Capabilities: Google is also focusing on making its search tools more universally accessible by allowing users to ask questions in different languages while receiving accurate, context-specific results, no matter where they are in the world.

Overall, Google’s video search feature is a big step toward making information more accessible in real-world, dynamic situations, enhancing the user experience and allowing for new, creative ways to interact with the digital world.